Hello,

After quite a bit of research, I managed to add LED control via HTTP requests.

I kept the existing code and added LED control on top of it using AsyncWebServer. Here is the code:

// SERVEUR WEB CAM From: https://community.jeedom.com/t/tuto-camera-a-focale-fixe-basee-sur-esp32/17587/49

// LED WIFI FROM: https://techtutorialsx.com/2018/02/03/esp32-arduino-async-server-controlling-http-methods-allowed/

// For installing Asyncwebserver: https://techtutorialsx.com/2017/12/01/esp32-arduino-asynchronous-http-webserver/

// modified by DRS - May 2020

//

//

#include <WiFi.h>

#include <WebServer.h>

#include <ESPAsyncWebServer.h> //permet la connexion simultanee pour la gestion de la LED

#include <WiFiClient.h>

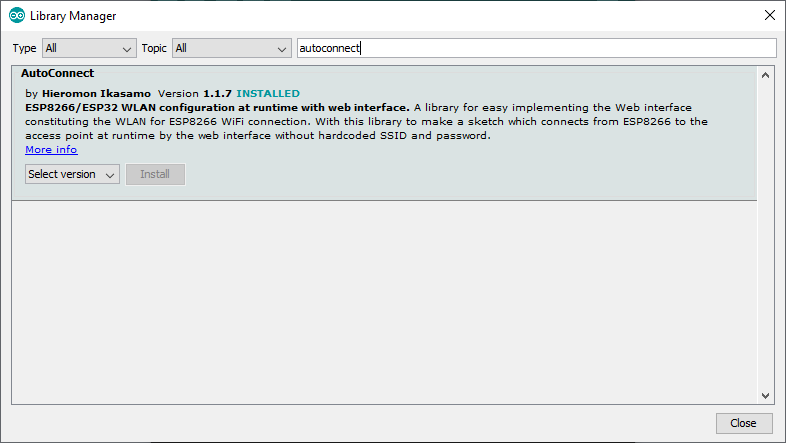

#include <AutoConnect.h> // Needs AutoConnect, PageBuilder libraries!

#include "soc/soc.h" //disable brownout problems

#include "soc/rtc_cntl_reg.h" //disable brownout problems

#define ENABLE_RTSPSERVER

#define ENABLE_WEBSERVER

#define RESOLUTION FRAMESIZE_XGA // FRAMESIZE_ + QVGA|CIF|VGA|SVGA|XGA|SXGA|UXGA

#define QUALITY 10 // JPEG quality 10-63 (lower means better quality)

#define PIN_FLASH_LED -1 // GPIO4 for AIThinker module, set to -1 if not needed!

#define LED_BUILTIN 4 // VA SERVIR POUR ALLUMER et ETEINDRE LA LED

#include "OV2640.h"

#ifdef ENABLE_RTSPSERVER

#include "OV2640Streamer.h"

#include "CRtspSession.h"

#endif

OV2640 cam;

boolean useRTSP = true;

WebServer server(80);

AsyncWebServer serverasync(81);

AutoConnect Portal(server);

AutoConnectConfig acConfig;

#ifdef ENABLE_RTSPSERVER

WiFiServer rtspServer(554);

CStreamer *streamer;

#endif

void setflash(byte state) {

if (PIN_FLASH_LED>-1){

digitalWrite(PIN_FLASH_LED, state);

}

}

void handle_jpg_stream(void)

{

WiFiClient client = server.client();

String response = "HTTP/1.1 200 OK\r\n";

response += "Content-Type: multipart/x-mixed-replace; boundary=frame\r\n\r\n";

server.sendContent(response);

while (1)

{

cam.run();

if (!client.connected())

break;

response = "--frame\r\n";

response += "Content-Type: image/jpeg\r\n\r\n";

server.sendContent(response);

client.write((char *)cam.getfb(), cam.getSize());

server.sendContent("\r\n");

if (!client.connected())

break;

}

}

void handle_jpg(void)

{

setflash(1);

WiFiClient client = server.client();

cam.run();

if (!client.connected())

{

return;

}

String response = "HTTP/1.1 200 OK\r\n";

response += "Content-disposition: inline; filename=snapshot.jpg\r\n";

response += "Content-type: image/jpeg\r\n\r\n";

server.sendContent(response);

client.write((char *)cam.getfb(), cam.getSize());

setflash(0);

}

void handle_root(void)

{

String reply = F("<!doctype html><html><H1>ESP32CAM</H1>");

reply += F("<p>Resolution: ");

switch(RESOLUTION) {

case FRAMESIZE_QVGA:

reply += F("QVGA (320x240)");

break;

case FRAMESIZE_CIF:

reply += F("CIF (400x296)");

break;

case FRAMESIZE_VGA:

reply += F("VGA (640x480)");

break;

case FRAMESIZE_SVGA:

reply += F("SVGA (800x600)");

break;

case FRAMESIZE_XGA:

reply += F("XGA (1024x768)");

break;

case FRAMESIZE_SXGA:

reply += F("SXGA (1280x1024)");

break;

case FRAMESIZE_UXGA:

reply += F("UXGA (1600x1200)");

break;

default:

reply += F("Unknown");

break;

}

reply += F("<p>PSRAM found: ");

if (psramFound()){

reply += F("TRUE");

} else {

reply += F("FALSE");

}

String url = "http://" + WiFi.localIP().toString() + "/snapshot.jpg";

reply += "<p>Snapshot URL:<br> <a href='" + url + "'>" + url + "</a>";

url = "http://" + WiFi.localIP().toString() + "/mjpg";

reply += "<p>HTTP MJPEG URL:<br> <a href='" + url + "'>" + url + "</a>";

url = "rtsp://" + WiFi.localIP().toString() + ":554/mjpeg/1";

reply += "<p>RTSP MJPEG URL:<br> " + url;

url = "http://" + WiFi.localIP().toString() + "/_ac";

reply += "<p>WiFi settings page:<br> <a href='" + url + "'>" + url + "</a>";

reply += F("</body></html>");

server.send(200, "text/html", reply);

}

void handleNotFound()

{

String message = "Server is running!\n\n";

message += "URI: ";

message += server.uri();

message += "\nMethod: ";

message += (server.method() == HTTP_GET) ? "GET" : "POST";

message += "\nArguments: ";

message += server.args();

message += "\n";

server.send(200, "text/plain", message);

}

void setup(){

pinMode(LED_BUILTIN, OUTPUT); // pour LED

digitalWrite(LED_BUILTIN, LOW); // pour LED

WRITE_PERI_REG(RTC_CNTL_BROWN_OUT_REG, 0); //disable brownout detector

Serial.begin(115200);

// while (!Serial){;} // debug only

camera_config_t cconfig;

cconfig = esp32cam_aithinker_config;

if (psramFound()) {

cconfig.frame_size = RESOLUTION; // FRAMESIZE_ + QVGA|CIF|VGA|SVGA|XGA|SXGA|UXGA

cconfig.jpeg_quality = QUALITY;

cconfig.fb_count = 2;

} else {

if (RESOLUTION>FRAMESIZE_SVGA) {

cconfig.frame_size = FRAMESIZE_SVGA;

}

cconfig.jpeg_quality = 12;

cconfig.fb_count = 1;

}

if (PIN_FLASH_LED>-1){

pinMode(PIN_FLASH_LED, OUTPUT);

setflash(0);

}

cam.init(cconfig);

server.on("/", handle_root);

server.on("/mjpg", HTTP_GET, handle_jpg_stream);

server.on("/snapshot.jpg", HTTP_GET, handle_jpg);

Portal.onNotFound(handleNotFound);

acConfig.apid = "ESP-" + String((uint32_t)(ESP.getEfuseMac() >> 32), HEX);

acConfig.psk = "configesp";

// acConfig.apip = IPAddress(192,168,4,1);

acConfig.hostName = acConfig.apid;

acConfig.autoReconnect = true;

Portal.config(acConfig);

Portal.begin();

// POUR LED

serverasync.on("/relay/off", HTTP_ANY, [](AsyncWebServerRequest *request){

request->send(200, "text/plain", "ok");

digitalWrite(LED_BUILTIN, LOW);

});

serverasync.on("/relay/on", HTTP_ANY, [](AsyncWebServerRequest *request){

request->send(200, "text/plain","ok");

digitalWrite(LED_BUILTIN, HIGH);

});

serverasync.on("/relay/toggle", HTTP_ANY, [](AsyncWebServerRequest *request){

request->send(200, "text/plain","ok");

digitalWrite(LED_BUILTIN, !digitalRead(LED_BUILTIN));

});

serverasync.on("/relay", HTTP_GET, [](AsyncWebServerRequest *request){

request->send(200, "text/plain", String(digitalRead(LED_BUILTIN)));

});

serverasync.begin();

//

#ifdef ENABLE_RTSPSERVER

if (useRTSP) {

rtspServer.begin();

streamer = new OV2640Streamer(cam); // our streamer for UDP/TCP based RTP transport

}

#endif

}

void loop()

{

//server.handleClient();

Portal.handleClient();

#ifdef ENABLE_RTSPSERVER

if (useRTSP) {

uint32_t msecPerFrame = 100;

static uint32_t lastimage = millis();

// If we have an active client connection, just service that until gone

streamer->handleRequests(0); // we don't use a timeout here,

// instead we send only if we have new enough frames

uint32_t now = millis();

if (streamer->anySessions()) {

if (now > lastimage + msecPerFrame || now < lastimage) { // handle clock rollover

streamer->streamImage(now);

lastimage = now;

// check if we are overrunning our max frame rate

now = millis();

if (now > lastimage + msecPerFrame) {

printf("warning exceeding max frame rate of %d ms\n", now - lastimage);

}

}

}

WiFiClient rtspClient = rtspServer.accept();

if (rtspClient) {

Serial.print("client: ");

Serial.print(rtspClient.remoteIP());

Serial.println();

streamer->addSession(rtspClient);

}

}

#endif

}
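A side note on the frame timer in `loop()`: it tests `now > lastimage + msecPerFrame` and adds a separate `now < lastimage` check to cope with `millis()` rolling over. A common alternative, shown here only as a suggestion and not as something the sketch does, is to subtract the two unsigned timestamps, which stays correct across the rollover without a special case. The helper name `intervalElapsed` is hypothetical.

```cpp
#include <cstdint>

// Hypothetical helper, not part of the sketch above.
// Returns true once at least intervalMs milliseconds have passed since lastMs.
// Unsigned subtraction wraps modulo 2^32, so the difference is still the true
// elapsed time when the millisecond counter rolled over between the two readings.
bool intervalElapsed(uint32_t nowMs, uint32_t lastMs, uint32_t intervalMs) {
    return static_cast<uint32_t>(nowMs - lastMs) >= intervalMs;
}
```

In the sketch this would presumably be called as `intervalElapsed(millis(), lastimage, msecPerFrame)`.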

Some details:

- `WebServer server(80);` sets up the regular web server on port 80.

- `AsyncWebServer serverasync(81);` sets up the AsyncWebServer on port 81.

Links:

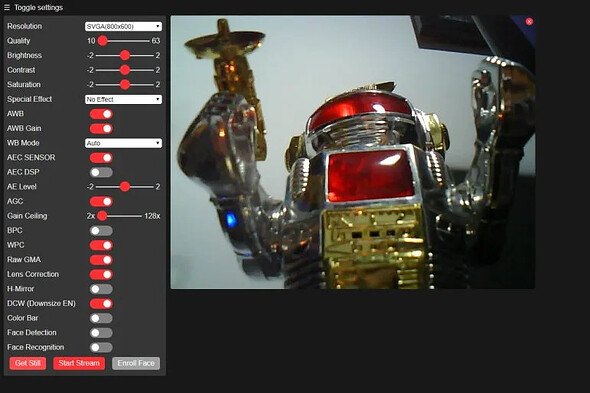

http://ip-esp/mjpg: the video stream

http://ip-esp/snapshot.jpg: a single image

http://ip-esp:81/relay/on: turns the LED on

http://ip-esp:81/relay/off: turns the LED off

http://ip-esp:81/relay/toggle: toggles the LED (on if it was off, off if it was on)

http://ip-esp:81/relay: current state of the LED (returned as 0 or 1)
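To make the behaviour of the four `/relay` routes explicit, here is a small hardware-free model in plain C++. It is only an illustration of what the handlers in the sketch do to the pin. The function names `ledLevelAfter` and `relayStatusBody` are made up for this example and do not exist in the sketch or in any library.

```cpp
#include <string>

// Hypothetical model of the /relay routes (illustrative names only).
// Given the request path and the current LED level, returns the level the
// LED has once the request has been handled. The read-only /relay route and
// any unknown path leave the level unchanged.
bool ledLevelAfter(const std::string& path, bool current) {
    if (path == "/relay/on") return true;
    if (path == "/relay/off") return false;
    if (path == "/relay/toggle") return !current;
    return current;
}

// Body returned by GET /relay: "1" when the pin reads HIGH, "0" when LOW.
std::string relayStatusBody(bool current) {
    return current ? "1" : "0";
}
```

So calling `/relay/toggle` twice in a row brings the LED back to its starting state, and `/relay` can be polled at any time without changing anything.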

So far, I have found that AsyncWebServer lets me control the LED without stopping the video stream.

The code is surely not perfect (this is the first time I have dug into it), and I wonder whether the async web server could also be used for the video, instead of running both servers.